SAP recently released its Business Objects Enterprise 4.0 Business Intelligence (BI) platform. The new version includes updates that improve both the end user experience and the administration of the application.

End Users

The new home page for Business Objects 4.0 is the BI Launchpad. Formerly called InfoView, BI Launchpad welcomes users to Business Objects with a home page similar to iGoogle. Widgets displayed on the home page include My Applications, My Recently Viewed Documents, Unread Messages in My Inbox, My Recently Run Documents, and Unread Alerts. A ‘Widget’ is a user interface allowing quick access to data or an application. The BI Launchpad also features tabbed browsing.

When logging in, users have two default tabs: the Home tab described above and the Documents tab. The Documents tab gives users the old InfoView Folders view featuring Favorites and Inbox. Another advantage of tabbed browsing is the ability to have multiple reports open at once, a feature Business Objects users have wanted for a while now. Users also have the option to pin reports and documents, making them available for quick access each time the user logs into the system. Below is a screenshot of the BI Launchpad.

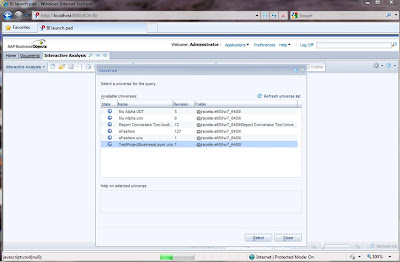

Another user tool modified for the Business Objects 4.0 release is Web Intelligence, which is also called Interactive Analysis in this release. The best feature added to Web Intelligence is a raw data view available in the query panel. While building a query, users simply click a Refresh button and 15 rows of raw data are presented, allowing a quick analysis of a sample of the returned data set. See the screenshot below for an example of the Data Preview feature.

If the preview does not show the expected results, the user can modify the query before running a larger report. Other highlights include more chart types with additional chart features, toolbars in ribbon form similar to Office 2007, and greater consistency between the Java and web interfaces.

Other user tools affected by the Business Objects 4.0 release include Desktop Intelligence, Xcelsius, Live Office, Voyager, and Explorer. Desktop Intelligence has been eliminated from the Business Objects Enterprise package, so all Desktop Intelligence reports must be converted to either Crystal Reports or Web Intelligence reports. The report conversion tool can be used before or after the Business Objects 4.0 upgrade is performed. Xcelsius is still available but has been renamed Dashboard Design. Live Office is incorporated within the release, making BI content more accessible throughout the Microsoft Office suite. Voyager, Business Objects’ OLAP data analysis tool, has been replaced with Advanced Analysis, which offers an enhanced task and layout panel view to improve productivity and depth of analysis for multidimensional data. SAP Business Objects Explorer is a data discovery application that allows users to retrieve answers to business questions from corporate data quickly and directly. Explorer is installed as an add-on to Business Objects Enterprise 4.0 and can be integrated with the BI Launchpad.

Administrators

Administration updates have been made throughout Business Objects Enterprise 4.0.

The look and feel of the Central Management Console (CMC) is similar to that of BOXI 3.0, although several features have been updated to improve administration. Auditing has been updated throughout the release, and the CMC Auditing feature allows administrators to modify what is being audited. Monitoring is now available through the CMC and allows administrators to verify that all components of the system are functioning properly. Response times can be viewed and CMS performance can be evaluated within the Monitoring feature. See the screenshot below for the Monitoring interface.

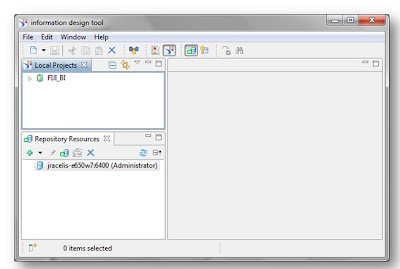

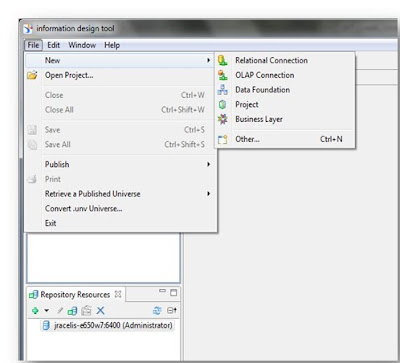

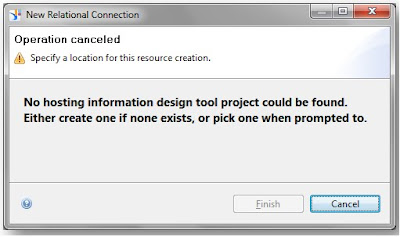

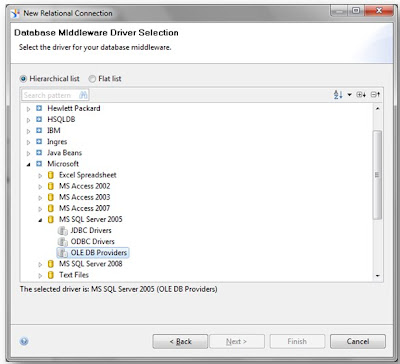

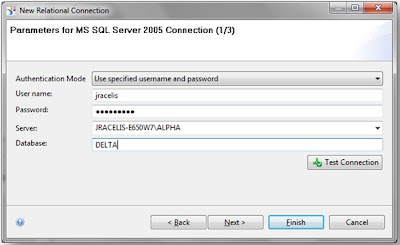

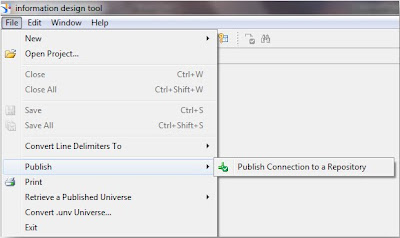

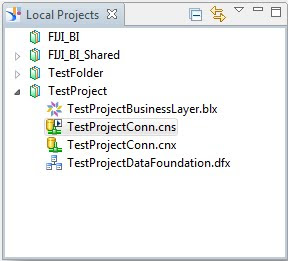

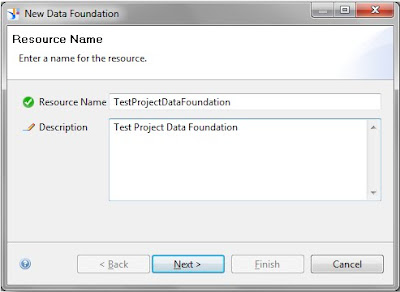

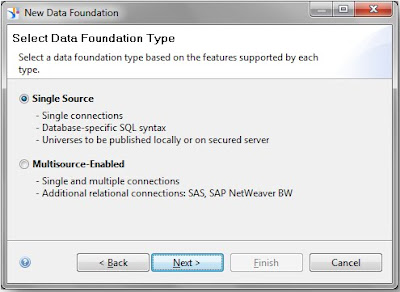

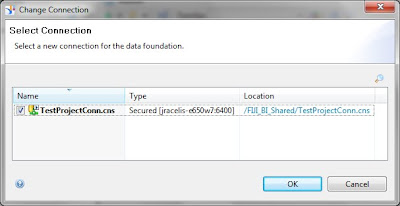

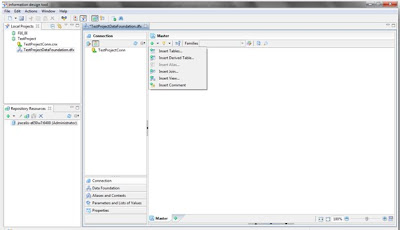

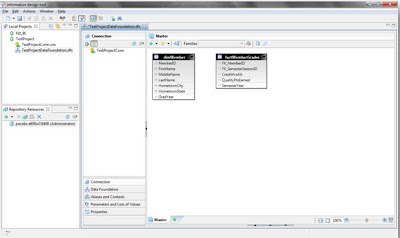

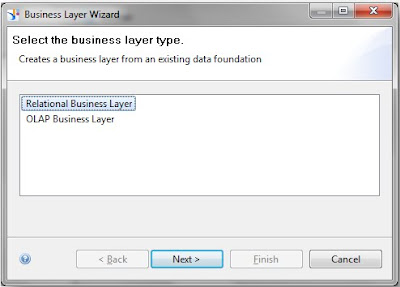

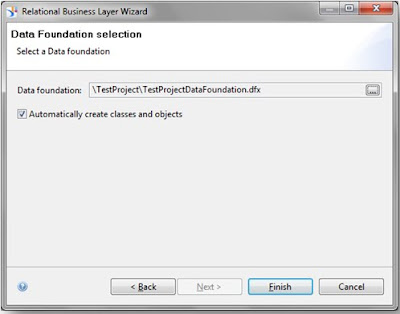

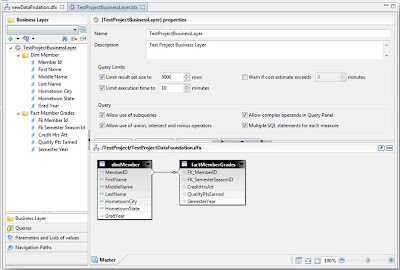

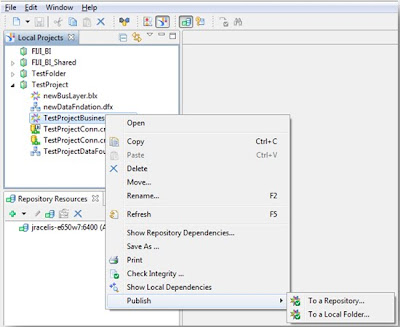

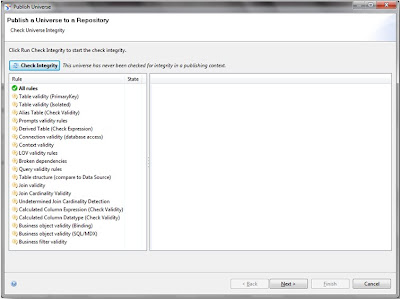

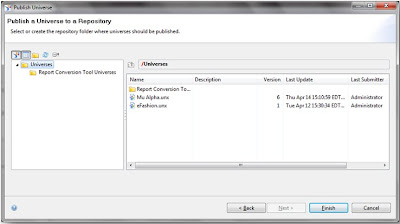

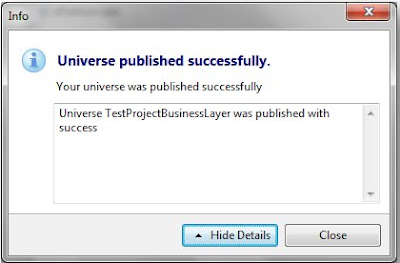

Universe design received an update in the 4.0 release with a new tool called the Information Design Tool. The highlights of the tool include the ability to create multisource universes, dimensional universes that support OLAP dimensions and hierarchies, and easier management of repository resources. Each universe now consists of three files (or layers): a connection layer, a data foundation, and a business layer. The connection layer defines the connections used for universe development. The data foundation layer defines the schemas being used for a relational universe. The business layer is the universe created from the data foundation. Resources can be shared, so the same connections and data foundations can be used to create multiple business layers (or universes). Below is a screenshot showing the Information Design Tool. The upper left shows the project with the different layers created. The bottom left shows the shared repository resources. The right side is similar to previous versions of Business Objects Designer.
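The relationship between the three layers can be pictured with a small data model. This is only a conceptual sketch in Python, not the Information Design Tool's file format or API; the class names, field names, and sample values (`sales_conn`, `corp_foundation`, and so on) are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Connection:
    """Connection layer: how to reach one data source (hypothetical fields)."""
    name: str
    source: str


@dataclass
class DataFoundation:
    """Data foundation: the schemas (tables) drawn from one or more connections."""
    name: str
    connections: List[Connection]
    tables: List[str] = field(default_factory=list)


@dataclass
class BusinessLayer:
    """Business layer: the universe that users see, built on a data foundation."""
    name: str
    data_foundation: DataFoundation
    objects: List[str] = field(default_factory=list)


def shares_foundation(a: BusinessLayer, b: BusinessLayer) -> bool:
    """Return True when two universes are built on the same data foundation."""
    return a.data_foundation is b.data_foundation


# Two connections feed one (multisource) data foundation.
sales_conn = Connection("sales_conn", "relational: sales warehouse")
hr_conn = Connection("hr_conn", "relational: HR database")
foundation = DataFoundation(
    "corp_foundation", [sales_conn, hr_conn], ["ORDERS", "EMPLOYEES"]
)

# The shared foundation backs two separate business layers (universes).
sales_universe = BusinessLayer("Sales", foundation, ["Revenue", "Order Date"])
staffing_universe = BusinessLayer("Staffing", foundation, ["Headcount"])
```

Here one data foundation draws on two connections and is reused by two business layers, which mirrors the resource sharing described above.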

The Import Wizard has been replaced by two separate tools, the Upgrade Management tool and the Lifecycle Management Console. The Upgrade Management tool allows for direct upgrades from Business Objects XI R2 SP2 or later, and its new easy-to-use interface helps upgrades go more smoothly. The Lifecycle Management Console is a web-based tool that gives administrators a way to handle version management. Rollback and promotion of objects are available between different platforms, provided the same version of Business Objects is being used.

Getting to 4.0

Business Objects 4.0 is a completely new install; an in-place upgrade option is not available. To migrate specific application resources (Universes, CMS data, etc.), older versions of Business Objects (5.x, 6.x) must first be upgraded to Business Objects XI R2 or later. The deployment of 4.0 has also been simplified through the use of a single WAR file for web application deployment. The Business Objects servers and the web application tier can only be installed and run on 64-bit operating systems.
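The migration path described above can be summarized as a short planning helper. This is a rough sketch under stated assumptions: the release labels and their ordering are illustrative, the function name `migration_steps` is hypothetical, and service pack requirements (such as XI R2 SP2 for the Upgrade Management tool) are not modeled.

```python
from typing import List

# Illustrative release labels, ordered oldest to newest (an assumption for
# this sketch; service packs and other releases are not modeled).
RELEASES = ["5.x", "6.x", "XI R2", "XI 3.x"]

# Oldest release from which content can be migrated to 4.0.
MIN_MIGRATABLE = "XI R2"


def migration_steps(current: str) -> List[str]:
    """Return the high-level steps to get from `current` to Business Objects 4.0."""
    if current not in RELEASES:
        raise ValueError(f"Unknown release label: {current!r}")

    steps = []
    # Releases older than XI R2 need an intermediate upgrade first.
    if RELEASES.index(current) < RELEASES.index(MIN_MIGRATABLE):
        steps.append(f"Upgrade {current} to {MIN_MIGRATABLE} or later")
    # 4.0 is always a fresh installation on a 64-bit operating system.
    steps.append("Install Business Objects 4.0 as a new installation (64-bit OS)")
    steps.append("Migrate content with the Upgrade Management tool")
    return steps


for step in migration_steps("6.x"):
    print(step)
```

For a 6.x system the helper lists three steps, starting with the intermediate upgrade; for XI R2 or XI 3.x it lists only the new installation and the content migration.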