Recently, a Guident team completed a data cleansing effort that helped a large federal agency centralize data for a new Business Intelligence system. The team took data from dozens of agency sources and put it through a systematic process of cleansing and matching to produce a consistent data set of the millions of companies that interact with the agency daily.

The ProblemThe new BI system for Guident’s agency client was designed to show different activities for each company the various agency departments deal with, including inspections, permits, adverse events, infractions, etc., all of which could come from dozens of different sources, in varying formats. All of this data was centralized and shown to personnel from all the different departments, allowing them to coordinate their activities, and to assign resources much more efficiently, since there was a limited number of agency personnel and millions of companies.

In order for the system to work, the name and location of each company needed to be consistent throughout the agency, and each activity, regardless of which department it originated from, would need to be properly associated to the correct cleansed company information.

Oh, and of course, time was limited because the new BI system was to go into User Acceptance Testing in 3 months for a scheduled spring launch.

Dirty Data

You’ve seen the situation before: different departments of an organization capture the same information in different ways. Some departments allow for free form data entry, which means the name of a company may be written as “IBM” or “I.B.M.” or “International Business Machines” or even “Int’l Biz Mach” – and those are the versions without misspellings. Some departments allow selection from dropdown lists, but those lists may not be complete enough forcing personnel to make judgments about just which location to select. Other departments use codes to stand for the real data – but the hidden data may not have been updated in a while.

After checking the many source databases, team analysts found themselves asking: just how many different versions of the word “Corporation” are there?

Cleansing and Matching

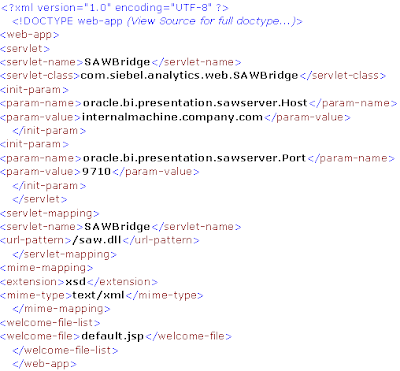

Company information was gathered primarily through each activity record. For example: an inspection had the name and address of the company facility inspected, primary contact information, the date of the inspection, the results of the inspection, any associated infraction information, the inspector’s information, etc. The team’s plan involved a two phase cleansing and matching method:

- All company information from new activities was first matched against an existing table of unique company names, TB_CENTRAL.

- To determine a match, the company facility name and address information was “standardized” – each word went through a function that converted it into a standard format. For example: if four separate records had “Ave.”, “avenue”, “Av”, and “AVN”, they would all be standardized as “AVE”. The full standardized company information would then be compared to the existing standardized company information already existing in TB_CENTRAL.

- If a match was found, then that new activity would be associated with the existing matched company.

- If no match was found, then the company information for the new activity was added to TB_CENTRAL, and the new TB_CENTRAL record received a code of AWAITING_CLEANSING.

- All records in TB_CENTRAL with the code of AWAITING_CLEANSING were sent to an outside data matching and cleansing service (such as Dun & Bradstreet or Lexis Nexis), which would return the records with the best match they could find.

- Each returned company facility record went through step 1a and 1b again.

- Companies that failed out of 1a and 1b were added as new cleansed records into TB_CENTRAL.

Figure 1: Centralized company information cleansing process

Great Results…and Lingering Issues

This method of data cleansing was very effective, producing a huge company cleansed data center that handled an initial batch of millions of records, and continued to handle hundreds of new activities on a daily basis. This new data center was so effective that owners of systems other than the BI system want to use it as their official source of company data.

There were, however, two important issues to deal with. First, even with the high record of matches, there were still activities whose company info was just not going to be cleansed. The team had decided that any important missing address information (missing state or zip code) would disqualify that record from the matching process. But the activities that matched those dirty company records were still important to the various departments of the federal agency. The team and the agency client decided that the best way to handle this data quality issue was to deliver report details and statistics to the departmental source owners for handling.

The second issue was that from a testing point of view, data had changed. For example, previously there may have been a list of 3 company facilities all which had different spellings of the same address (“Saint Paul, MN”, “St. Paul, MN”, “Seint Poll, MN”), but with any close scrutiny, anyone would conclude that they were the same address. If record 1 had 5 activities, record 2 had 20 activities, and record 3 had 1 activity, under the new company cleansed data center there would be just one company record with 26 associated activities. This was expected, but it also introduced the need to carefully train the client personnel during User Acceptance Testing to be aware of changes in certain expected results.

But having to explain to your user community why their new system really is better is a good problem.

Tools Involved in this Project

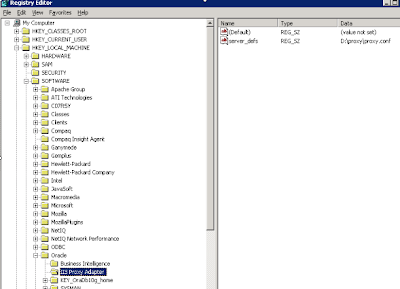

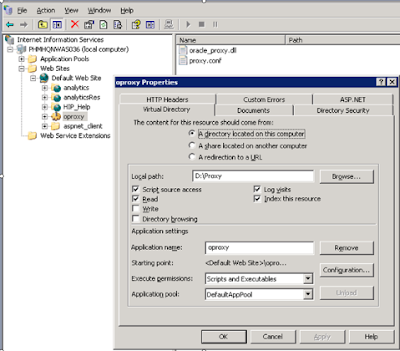

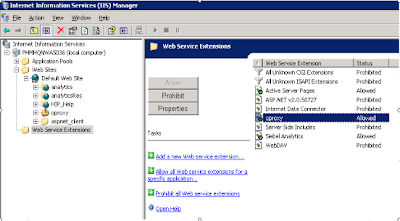

- Oracle Business Intelligence – agency BI system, reporting.

- Informatica – ETL from agency department data sources into the company cleansed data center, and data analysis.

- Oracle 10g – databases, SQL procedures and functions.

- Erwin – data modeling of the company cleansed data center.