What is DAC?

Oracle Business Intelligence Data Warehouse Administration Console (Oracle DAC) provides a centralized console for schema management, configuration, administration, loading, and monitoring of the Oracle Business Analytics Warehouse. The Data warehouse Application Console (DAC) is used to create and schedule Informatica ETL workflows. It manages the following data warehouse load processes:

- Dynamic generation of subject areas and execution plans

- Dynamic settings for parallelism and load balancing

- Intelligent task queue engine based on user-defined and computed scores

- Index management for ETL and query performance

- Embedded high-performance OTLP change capture techniques

- Restart from any point of failure

DAC / ETL Statistics

The metadata for ETL is stored in the DAC repository that is composed of approximately 120 tables. These tables store the ETL metadata such as tasks, task steps, execution plan details, connection details, run history, etc.

In this exercise we are only interested in the run history table - W_ETL_DEFN_RUN. This table has the ETL execution details for every Execution plan that was run through DAC (see screenshot below).

We will be walking you through the steps for creating a simple solution that will allow you to analyze the metadata in W_ETL_DEFN_RUN in OBIEE.

RPD

Below is a simple RPD model that is based on the single W_ETL_DEFN_RUN table from the DAC repository.

Reports

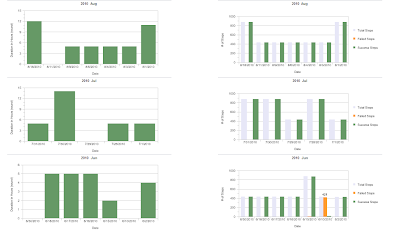

Using the subject area (Statistics – ETL) shown above, you can build useful reports to analyze the execution history (for each execution plan type).

1. Current month summary view with duration in minutes & hours.

2. Snapshot of run duration for each month.

Notice the failed steps in orange below.

3. Report showing Error / Status description with number of steps – Total, Failed, and Success.

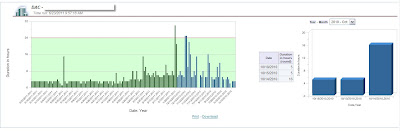

4. Compare the ETL for large duration (2010 and 2011).

By adding other repository tables into your RPD you will be able to develop more sophisticated reports. You can even have error reports delivered to you with iBots whenever ETL errors occur.