Enterprise Findability: Leveraging Synergies between the Common Electronic Repository and Enterprise Search

This paper describes the synergies that organizations can achieve if Enterprise Content Management (ECM) and Enterprise Search technologies are considered and implemented together.

Many organizations are required to identify, retain, and share mission-critical information efficiently. Historically, individual departments in an organization took responsibility for assuring appropriate retention and access. However, the increasing complexity of regulation means that this mission-critical information increasingly applies across entire organizations. Information in one department may be relevant to another department, as together they work to provide consolidated information to the external world.

A key challenge for any organization is to achieve the best use of the information assets in its repositories. The goals are to eliminate duplicate content, maximize content reuse, and assure that information is protected and accessible. These goals summarize the concept of findability. In essence, findability is the art and science of locating information in or about electronic documents. People want to find answers, not search for them. AIIM, the Association for Information and Image Management, says in a 2008 report that “effective Findability retrieves content in context. Therein lies the crux of Findability. It cannot be attained simply by search, even a powerful search.” (AIIM MarketIQ, 2008). Improving findability requires a cooperative strategy, achieved by combining complementary technologies and systems. Findability is critical to effective use of information at many organizations.

No organization today can afford to duplicate assets or investments, whether in enterprise software or knowledge assets developed by its workers. Savvy organizations instead are adopting Information Lifecycle Management (ILM) practices. These ILM practices are “based on aligning the business value of information to the most appropriate and cost effective infrastructure.” (SNIA, 2004). ILM practices recognize that multiple technologies are critical to attaining desired organizational outcomes.

Findability Efforts at a Large Government Agency: An Overview

In 2006, a large federal government agency recognized the critical role of its information and resources by creating a board to better coordinate IT investment. The board also initiated a set of enterprise-wide initiatives aimed at modernizing the agency's IT systems. Among these initiatives is the creation of a common electronic document repository, whose objective is to integrate individual repositories and contain the vast majority of documents created or received by the agency. This would:

1. Improve access to the content and its associated metadata, and

2. Facilitate reviewers’ and others’ ability to do their jobs effectively and efficiently.

More recently, the agency launched an Enterprise Search initiative to provide agency-wide searches of its information repositories, one of which would be the Common Electronic Repository.

These two unfolding projects position the agency to plan and implement them in concert to meet Information Lifecycle Management best practices: increase findability, with maximum effectiveness and minimum cost.

Here is how both support findability.

Findability: Concepts and Technologies

Because of their interlocking components, a variety of technologies can enhance findability. Organizations seeking to enhance findability should select whichever combination of technologies best meets their needs. The agency has already determined that two critical enterprise technologies are needed to attain findability: a Common Electronic Repository and Enterprise Search. Together these can overcome an agency's findability challenges:

1. Multiple silos of information that segregate potentially useful content into individual repositories,

2. Multiple sets of metadata and terminology, making it challenging to identify all potentially relevant content,

3. Rapid growth in content that burdens storage and hinders implementing electronic record policies.

Attributes, properties, and metadata all refer to the same thing: information about, not inside, the content. Both a Common Electronic Repository and Enterprise Search will use metadata. By identifying the synergies of these two systems, organizations will enable employees to find and use what they need, when they need it.

How the Common Electronic Repository Enhances Findability

A Common Electronic Repository increases findability by:

1. Providing a hierarchical folder structure that shows content groupings and relationships

2. Associating metadata with content, providing document context and enhancing internal search of the content

3. Supporting the setting of security levels and other access controls

As an ECM system, the Common Electronic Repository provides a hierarchical folder structure (or taxonomy) for content storage. By merely looking at this taxonomy, users can understand important content groupings and their relationships.

Another key feature of ECM systems for a Common Electronic Repository is their capability to associate metadata with content. Metadata adds context to the content, helping users better understand how, when, and why the content was created. For example, each piece of content in a Common Electronic Repository will have several common metadata attributes such as “Document Authors,” indicating whom to contact for more information.

Metadata can be designed to use controlled, predefined lists of keywords. A specific attribute such as “drug additive” could contain only one of a small set of values. When an attribute is constrained to a predefined list with values like “Drug Evaluation and Research,” Enterprise Search will return more relevant results and would not need lists of synonymous names.
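A controlled list of this kind can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the attribute name `center`, the function `validate_metadata`, and every value other than “Drug Evaluation and Research” are hypothetical and do not come from any particular ECM product.

```python
# Hypothetical controlled vocabulary: each attribute maps to its allowed values.
CONTROLLED_VALUES = {
    "center": {
        "Drug Evaluation and Research",  # value taken from the text
        "Example Center A",              # hypothetical
        "Example Center B",              # hypothetical
    },
}


def validate_metadata(metadata: dict) -> list:
    """Return a list of problems; an empty list means the metadata is valid."""
    problems = []
    for attribute, value in metadata.items():
        allowed = CONTROLLED_VALUES.get(attribute)
        # Attributes without a controlled list accept free text.
        if allowed is not None and value not in allowed:
            problems.append(f"{attribute}: {value!r} is not an allowed value")
    return problems
```

Rejecting a free-text variant such as “Drug Eval” when the content is saved is what lets a later search match on one exact value instead of a list of synonyms.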

A Common Electronic Repository also supports the setting of security levels and other access controls. These also can provide context for the content. Content might be considered available for limited release, such as within a specific research group, or have constrained usage based on specific time periods. Access controls also reduce visual clutter, since users see only what they have rights to see, and they can change content only as policy permits.

In summary, a Common Electronic Repository will enhance findability. The system’s folder structure and metadata are shared. Folders provide additional relevant context. The system allows content to cross organizational boundaries, enhancing findability. The organization also establishes a shared understanding of the domain and its content.

How Enterprise Search Enhances Findability

Enterprise Search will also play an important role in findability. That is why enterprise search systems are among the first technologies organizations consider as they wrestle with findability challenges. The most basic enterprise search function is to generate indexes for content items. For example, search systems generate indexes of key words to search content. Search systems also provide relevance ranking. However, credible relevance ranking requires advanced Enterprise Search features. Incorporating these advanced features adds value to findability:

• Create and manage organization-specific thesauri. This helps a user who searches for a word that is missing from the documents of interest. Thesauri help the search system return all documents of interest by also finding those that contain different words with the same meaning as the user's search term.

• Support term weighting. This identifies the terms that users might find more important than others when all have similar meanings. Term weighting, combined with thesaurus support, enhances findability.

• Provide natural language processing. This allows Search to analyze content beyond merely identifying key words. For example, a document that contains the word “bush” could be analyzed to determine whether it was about a United States president or a type of vegetation.
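The first two features above, thesauri and term weighting, can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the thesaurus entries, the weights, and the function names `expand_query` and `score` are hypothetical, and real search engines use far richer ranking models.

```python
# Hypothetical organization-specific thesaurus: each term maps to its synonyms.
THESAURUS = {
    "physician": {"doctor", "clinician"},
}
# Hypothetical term weights: the preferred term counts more than its synonyms.
TERM_WEIGHTS = {"physician": 2.0, "doctor": 1.0, "clinician": 1.0}


def expand_query(term: str) -> set:
    """Return the search term together with its thesaurus synonyms."""
    return {term} | THESAURUS.get(term, set())


def score(document: str, term: str) -> float:
    """Sum the weight of every occurrence of the term or one of its synonyms."""
    wanted = expand_query(term)
    words = document.lower().split()
    return sum(TERM_WEIGHTS.get(word, 1.0) for word in words if word in wanted)
```

With this sketch, a search for “physician” still scores a document that only says “doctor”, while a document using the preferred term scores higher per occurrence. The thesaurus here is one-directional; a production thesaurus would normally map synonyms both ways.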

Since a Common Electronic Repository will contain both internal and external content from large numbers of sources, the Enterprise Search system’s natural language support will help searchers sift through these different kinds of information.

Because Search systems work with indexes created from content throughout the enterprise, they can find relevant content no matter where it is stored. No navigation through a pre-set folder structure is needed. Such navigation requires choices which may not be intuitive when a user is not familiar with the domain.

In summary, Enterprise Search will play an important role in meeting an organization’s findability needs. Because an organization cannot pre-determine all relevant organizational structures, or other context for content, Enterprise Search will provide the opportunity to avoid dealing with specific folder structures, such as those in a Common Electronic Repository, and still find useful content.

A Common Electronic Repository Provides Value to Enterprise Search

One of the limitations of any enterprise search system is its brute-force nature. Search systems operate primarily on individual words, which by themselves are isolated from context. The result is that users often have to wade through long lists of search results to find what they are really looking for. An Enterprise Content Management system is a good source of context that adds value to an Enterprise Search engine and can also shorten those lists. A Common Electronic Repository can help organize search results by providing groups (“facets”) of Enterprise Search results. A good source of those facets is the Common Electronic Repository folder taxonomy.

Enterprise search systems can also use folder names to refine search results by allowing a search restricted to a particular branch in a folder hierarchy. Many search engines also allow advanced use of dictionaries and thesauri. Since every organization is unique, these dictionaries are generally not available “out-of-the-box” but instead must be built to reflect the organization’s vocabularies. However, a Common Electronic Repository folder structure could serve as an initial set of preferred terminology for Enterprise Search dictionaries, rather than requiring an organization to create that starter dictionary from scratch.
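Both uses of the folder taxonomy, grouping results into facets and restricting a search to one branch, can be sketched in Python. The folder paths, document titles, and function names below are hypothetical examples, not taken from any actual repository.

```python
from collections import Counter

# Hypothetical search hits: (document title, repository folder path).
HITS = [
    ("Inspection report 1", "Inspections/Site Inspections"),
    ("Inspection report 2", "Inspections/Site Inspections"),
    ("Label review", "Reviews/Labeling"),
]


def facet_counts(hits):
    """Count hits per top-level folder, for display as facets."""
    return Counter(path.split("/")[0] for _, path in hits)


def restrict_to_branch(hits, branch):
    """Keep only hits stored in the given folder or any of its subfolders."""
    branch = branch.rstrip("/")
    prefix = branch + "/"
    return [(title, path) for title, path in hits
            if path == branch or path.startswith(prefix)]
```

Matching on the folder name plus a trailing separator keeps a branch such as “Insp” from accidentally matching “Inspections”.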

Enterprise Search can index metadata in a Common Electronic Repository to focus the types of searches available, again providing context to the content. The investment made in adding rich metadata values to a Common Electronic Repository becomes immediately available to Enterprise Search. For example, a user might want to see content related to a specific drug, Lisinopril, but only when that content was written as part of a site inspection.

By making use of Common Electronic Repository metadata, an Enterprise Search query could say in effect “show me only those documents containing the word ‘Lisinopril’ which also have been tagged as a ‘site inspection’.”
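A query of that shape can be sketched as a keyword match combined with a metadata filter. The sample documents, the metadata field name `document_type`, and the `search` function are hypothetical and stand in for whatever a real search engine and repository would expose.

```python
# Hypothetical indexed documents: full text plus repository metadata.
DOCUMENTS = [
    {"id": 1, "text": "Lisinopril batch records reviewed on site",
     "metadata": {"document_type": "site inspection"}},
    {"id": 2, "text": "Lisinopril labeling correspondence",
     "metadata": {"document_type": "correspondence"}},
    {"id": 3, "text": "Facility walkthrough notes",
     "metadata": {"document_type": "site inspection"}},
]


def search(documents, keyword, **metadata_filters):
    """Return ids of documents containing the keyword and matching every filter."""
    keyword = keyword.lower()
    results = []
    for doc in documents:
        has_keyword = keyword in doc["text"].lower().split()
        has_metadata = all(doc["metadata"].get(field) == value
                           for field, value in metadata_filters.items())
        if has_keyword and has_metadata:
            results.append(doc["id"])
    return results
```

Calling `search(DOCUMENTS, "Lisinopril", document_type="site inspection")` keeps only the first document; without the filter, the correspondence document would be returned as well.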

Search Provides Value to ECM

Just as the Common Electronic Repository will add value to Enterprise Search, Enterprise Search can greatly enhance the value of a Common Electronic Repository. Like all ECM systems, a Common Electronic Repository provides structures to store and process content according to an organization’s business rules. However, a Common Electronic Repository can provide only rudimentary searching.

• Enterprise Search will provide richer searching than the basic search that is part of the Common Electronic Repository. By reusing metadata already describing content in the Common Electronic Repository, Enterprise Search can provide more relevant search results.

• By supporting dictionaries (such as lists of synonyms), Enterprise Search can provide additional ways to find content when the Common Electronic Repository folder names don’t match a user’s search query.

Enterprise Search will also provide a findability alternative to navigating Common Electronic Repository folders. Rich Enterprise Search features can even allow a searcher to influence the search process to create his or her own context, as opposed to the one represented by the single Common Electronic Repository folder structure.

Lastly, Enterprise Search will provide another important feature: search logs. Search logs provide a record of what search queries users ran. Search administrators can analyze these logs to show how content is used, and logs can even suggest possible changes to the Common Electronic Repository folder structure, metadata elements and values.
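As a rough illustration of this kind of analysis, the Python sketch below summarizes a small search log. The log format (query text plus result count), the sample entries, and the function names are assumptions; actual search products record logs in their own formats.

```python
from collections import Counter

# Hypothetical log entries: (query text, number of results returned).
SEARCH_LOG = [
    ("site inspection", 42),
    ("Site Inspection", 42),
    ("adverse event form", 0),
    ("lisinopril", 17),
    ("adverse event form", 0),
]


def top_queries(log, n=3):
    """Most frequent queries, ignoring case."""
    return Counter(query.lower() for query, _ in log).most_common(n)


def zero_result_queries(log):
    """Queries that found nothing: candidates for new synonyms, metadata values, or folders."""
    return sorted({query.lower() for query, hits in log if hits == 0})
```

Frequent queries suggest which folders or metadata values matter most to users, while queries that return nothing point to vocabulary that the folder names, metadata values, or thesauri do not yet cover.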

Leveraging the Synergies

To repeat, neither Enterprise Search nor a Common Electronic Repository alone can provide a complete findability solution. Implemented together, they not only support richer findability, they do so more efficiently than either by itself.

A Common Electronic Repository, with its pre-set folder structure, and Enterprise Search with its ability to cross storage locations, provide two different approaches to finding content. Both approaches will be valuable depending on each user’s particular needs. One person familiar with the Common Electronic Repository folders may find navigating its folders faster and more effective than using Enterprise Search, which might seem more “scattershot.” Another person, unfamiliar with the Common Electronic Repository, could prefer Enterprise Search for rapidly finding relevant content. For that user, navigating through unfamiliar folders and reviewing content within each folder might be cumbersome.

A key operational challenge for deploying any enterprise search system is building connections to various ECM systems and translating their metadata elements to those used in the Search system. Integrating most content into one repository, the Common Electronic Repository, reduces the number of bridges and maps for Enterprise Search. This in turn reduces initial implementation cost as well as ongoing maintenance costs. Failure to consolidate content into the Common Electronic Repository would increase costs as the number and size of island repositories increase. Enterprise Search system administrators would have to spend ever-increasing resources to maintain those ECM system bridges and maps. Over time, the result would be a babble of inconsistencies, reduced relevancy, and decreased confidence in the Enterprise Search system’s results.
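The “maps” in question can be pictured as one field-translation table per source repository. The sketch below is hypothetical throughout: the repository names, the source field names other than “Document Authors”, and the search-side field names are invented for illustration.

```python
# Hypothetical field maps: one per source repository, translating its
# metadata names to the names used by the search index.
FIELD_MAPS = {
    "common_repository": {"Document Authors": "author", "Doc Type": "document_type"},
    "legacy_silo": {"Creator": "author", "Category": "document_type"},
}


def to_search_fields(source: str, metadata: dict) -> dict:
    """Rename a document's metadata fields to the search index's names."""
    field_map = FIELD_MAPS[source]
    # Fields with no mapping are dropped rather than guessed.
    return {field_map[name]: value
            for name, value in metadata.items() if name in field_map}
```

Every additional island repository adds another entry to `FIELD_MAPS` that must be built and kept current; consolidating content shrinks that table toward a single map.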

Deploying both a Common Electronic Repository and an Enterprise Search system also reduces the costs of governance for each. A single set of centralized governance processes applied to Common Electronic Repository content and folder structures minimizes costs, since only one folder structure needs to be reviewed, updated, and managed. Enterprise Search governance effort also decreases, since the metadata and the meaning of taxonomy nodes in the Common Electronic Repository are stable, predictable, and understood by Enterprise Search users.

Conclusions

Working together, a Common Electronic Repository and Enterprise Search achieve findability levels unavailable to either alone. Each system brings unique advantages to enhancing findability. Implementing both Enterprise Search and a Common Electronic Repository is critical to reducing costs, getting the best use from technology investments, and achieving the level of findability that an organization's mission requires.

References

AIIM MarketIQ. (Q2 2008). “Findability: The Art and Science of Making Content Easy to Find.” http://www.aiim.org/Research/MarketIQ/Findability-7-16-08.aspx

SNIA: Storage Networking Industry Association. (2004). Information Lifecycle Management: A Vision for the Future. http://www.snia.org/forums/dmf/programs/ilmi/ilm_docs/SRC-Profile_ILM_Vision_3-29-04.pdf (accessed March 10, 2010).

For more information on these topics, go to http://www.guident.com/ or contact the author directly at rweiner@guident.com.

Traditionally, a star schema has a single fact table with many dimensions. The dimensions are related to many fact records in a one-to-many relationship to the fact. However, sometimes the relationship of the data is many-to-many. An example for this comes from the healthcare industry where one doctor visit record can be associated with multiple diagnosis codes.

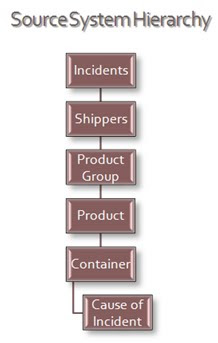

We had to create reports at a detail level called “cause of incident” for damages or injuries captured in aggregate at the incident level. The challenge was to attribute all damages in an incident to each cause without double counting damages or injuries at the grain being reported.

Unfortunately, bridge tables require a weighting factor. Since the measures existed in the source system only at the summary level, the weighting factor would not correctly attribute fatalities to each detail-level item. For example, suppose an incident with 2 fatalities was attributed to two causes, an accident and a fire. When counting the number of deaths caused by fire, the business rule is to count 2 for fire, not the 1 that a weighting factor of 0.5 would yield.
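The arithmetic behind this example can be checked with a short Python sketch. It illustrates the two counting rules only, under assumed names (`incident_fatalities`, `bridge`, `fatalities_by_cause`); it is not the schema or the solution used on the project.

```python
# The incident from the text: 2 fatalities, attributed to two causes.
incident_fatalities = {"incident_1": 2}
# Bridge rows: (incident, cause, weighting factor). Weights per incident sum to 1.
bridge = [
    ("incident_1", "accident", 0.5),
    ("incident_1", "fire", 0.5),
]


def fatalities_by_cause(weighted: bool) -> dict:
    """Total fatalities per cause, with or without the weighting factor."""
    totals = {}
    for incident, cause, weight in bridge:
        factor = weight if weighted else 1.0
        totals[cause] = totals.get(cause, 0.0) + incident_fatalities[incident] * factor
    return totals


weighted_totals = fatalities_by_cause(weighted=True)     # fire counts as 1.0
unweighted_totals = fatalities_by_cause(weighted=False)  # fire counts as 2.0
```

The unweighted rule gives the required count of 2 for fire, but summing across causes then yields 4 fatalities for an incident that had 2, which is why a grand total over causes is not meaningful under that rule.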

The downside is that grand totaling does not work correctly, although this did not cause a problem for our requirements. If your client’s business rule is to attribute summary-level measures equally across the details, then a bridge table will work with the appropriate weights. If you need to have multiple many-to-many details using unweighted summary-level measures, this solution will work. In summary, this method may not work for every project, but for some business requirements it will make a challenging scenario work.

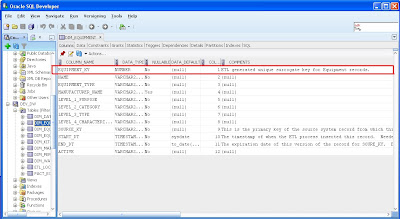

For performance optimization reasons, we recommend creating a composite index on business_key and check_sum.

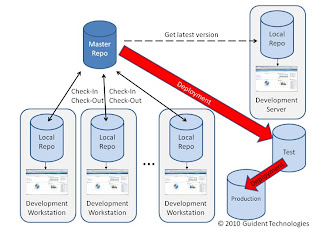

In all cases, the joins against the dimension table will be based on index lookups because we have a composite index on business_key and check_sum. Therefore, identifying new or updated records is quite efficient. The drawback of this solution is the necessity to perform a full data load into the staging area, which may not be feasible for large source systems.
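A minimal sketch of this checksum comparison, using Python's built-in `sqlite3` module with an in-memory database, is shown below. Only the column names `business_key` and `check_sum` come from the text; the table names, the `name` column, the sample rows, and the choice of SHA-256 for the checksum are assumptions made for illustration.

```python
import hashlib
import sqlite3


def check_sum(*attributes: str) -> str:
    """Checksum over a record's descriptive attributes (SHA-256 is an assumption)."""
    return hashlib.sha256("|".join(attributes).encode("utf-8")).hexdigest()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_customer (business_key TEXT, check_sum TEXT, name TEXT);
    CREATE TABLE stg_customer (business_key TEXT, check_sum TEXT, name TEXT);
    -- Composite index recommended in the text.
    CREATE INDEX ix_dim_key_sum ON dim_customer (business_key, check_sum);
""")
conn.executemany("INSERT INTO dim_customer VALUES (?, ?, ?)",
                 [("C1", check_sum("Ann"), "Ann"), ("C2", check_sum("Bob"), "Bob")])
# Full load into staging: C1 unchanged, C2 changed, C3 new.
conn.executemany("INSERT INTO stg_customer VALUES (?, ?, ?)",
                 [("C1", check_sum("Ann"), "Ann"),
                  ("C2", check_sum("Robert"), "Robert"),
                  ("C3", check_sum("Cy"), "Cy")])

# New records: business key absent from the dimension.
new_keys = [row[0] for row in conn.execute("""
    SELECT s.business_key FROM stg_customer s
    LEFT JOIN dim_customer d ON d.business_key = s.business_key
    WHERE d.business_key IS NULL
    ORDER BY s.business_key""")]

# Updated records: business key present, but no row with the same checksum.
updated_keys = [row[0] for row in conn.execute("""
    SELECT DISTINCT s.business_key FROM stg_customer s
    JOIN dim_customer d ON d.business_key = s.business_key
    WHERE NOT EXISTS (SELECT 1 FROM dim_customer d2
                      WHERE d2.business_key = s.business_key
                        AND d2.check_sum = s.check_sum)
    ORDER BY s.business_key""")]
```

Both queries look up the dimension by business_key, and the second also by check_sum, which is the access pattern the composite index is meant to serve.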

Step 4: Use ERwin’s Report Builder to create a Data Dictionary document. Report Builder queries the ERwin data model to create high quality PDF, Word, XML, or HTML documents that can be used as client deliverables. The screenshots below show the basic steps and a sample RTF output file.
