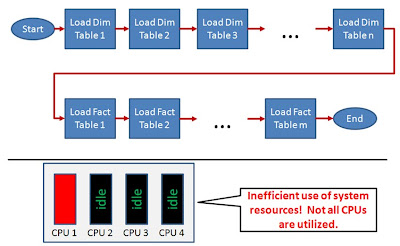

Thus, to utilize all available CPUs on a server, ETL tasks should be scheduled to execute in parallel. Scheduling a highly parallelized ETL workflow requires some planning, as all ETL task dependencies need to be understood first. For example, if DimTable1 and DimTable2 are dimension tables for FactTable1, then both must be loaded before FactTable1 can be loaded. In addition, if there are no dependencies between DimTable1 and DimTable2, their ETL processes can be executed in parallel.

The output of this dependency analysis is an ETL Task Execution Order Map, as outlined in the figure below. The ETL Task Execution Order Map then serves as the template for the ETL workflow layout. Because each concurrently running task can occupy an available CPU, this approach uses multiple (if not all) CPUs and provides better performance.
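As a rough illustration of the dependency analysis, the sketch below groups tasks into stages so that every task in a stage depends only on tasks from earlier stages. It uses Python's standard `graphlib` module (Python 3.9+). The function name `execution_order_map` and the dictionary layout are assumptions made for this example; only the table names come from the text above.

```python
from graphlib import TopologicalSorter


def execution_order_map(dependencies):
    """Group tasks into stages.

    `dependencies` maps each task to the set of tasks that must finish
    before it can start. Tasks within one stage do not depend on each
    other and can therefore run in parallel.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all tasks whose predecessors are done
        stages.append(ready)
        sorter.done(*ready)                 # mark the whole stage as finished
    return stages


# Hypothetical dependency list matching the example in the text.
deps = {
    "DimTable1": set(),
    "DimTable2": set(),
    "FactTable1": {"DimTable1", "DimTable2"},
}

for number, stage in enumerate(execution_order_map(deps), start=1):
    print(f"Stage {number}: {', '.join(stage)}")
```

For the three tables above, this yields one stage containing both dimension tables followed by a stage containing the fact table. Grouping by stage is a simplification: a scheduler could also start a task as soon as its own predecessors finish, rather than waiting for the whole stage.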

One word of caution, though: The number of parallel scheduled tasks should not significantly exceed the number of CPUs on your server. If there are many more concurrent tasks than CPUs, then the overhead of the OS scheduler switching jobs in and out of CPU time may actually decrease performance.

To avoid this problem, many ETL tools (such as Informatica PowerCenter) provide a global setting that limits the number of concurrently processed tasks regardless of how many tasks are scheduled to run in parallel. Let us call this setting N. As a general rule, N should be set to the number of CPUs (cores) of the server. With this limit in place, one can schedule as many tasks in parallel as the ETL Task Execution Order Map allows. This option is very useful in environments where the development, test, and production hardware have different numbers of CPUs, as we do not have to design workflows around the underlying hardware constraints. Instead, we simply set N in each environment to the respective number of CPUs.
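The effect of such a limit can be imitated outside an ETL tool with a bounded worker pool. The following is a minimal sketch, not any tool's actual mechanism: `run_with_cap` is a hypothetical helper, and the tasks are stand-ins that only sleep. All tasks are submitted at once, but the pool never runs more than `n` of them at the same time.

```python
import os
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def run_with_cap(tasks, n):
    """Run all tasks with at most n executing concurrently.

    Returns the highest number of tasks observed running at once.
    """
    lock = threading.Lock()
    running = 0
    peak = 0

    def wrapped(task):
        nonlocal running, peak
        with lock:
            running += 1
            peak = max(peak, running)
        try:
            task()
        finally:
            with lock:
                running -= 1

    # max_workers plays the role of the global setting N.
    with ThreadPoolExecutor(max_workers=n) as pool:
        futures = [pool.submit(wrapped, task) for task in tasks]
        for future in futures:
            future.result()  # re-raise any exception from a task
    return peak


# N is derived from the machine, so the same workflow definition
# adapts to development, test, and production hardware.
N = os.cpu_count() or 1

# Stand-in tasks; real ETL tasks would call out to a database or ETL engine.
tasks = [lambda: time.sleep(0.05) for _ in range(4 * N)]
print(f"N = {N}, peak concurrency = {run_with_cap(tasks, N)}")
```

Because the cap is read from the machine at run time, the list of submitted tasks does not need to change between environments. Threads are used here only to keep the sketch short; CPU-bound work written in Python itself would typically need a process pool instead.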